Could the miscalculation of four OpenAI board members doom humanity?

Also, download anything from ChatGPT that you'd be really sad to lose

Even people whose full-time job is creating social media content about AI are struggling to keep up with the OpenAI debacle right now. Here’s a screenshot of the sidebar from The Verge earlier today:

I normally consider it a victory to get out one post every two weeks. I posted “OpenAI’s board fired Sam Altman. What does it mean?” just 2.5 days ago, and this week I’m also caring for my 6yo and 4yo, who are on day 8 of testing positive for COVID. I will say, though, that I can’t imagine a more diverting series of interruptions between wiping butts and noses:

In any case, I assume this post could be entirely outpaced by events by the time anyone reads it, and I hope to write another post in a few days with an entirely new analysis (if I remain COVID-free myself!). But here goes nothing, for now:

What is even happening?

I can’t possibly summarize everything that has happened since my previous post. Here’s the best attempt at a timeline of the past few days that I’ve found so far (again, probably wildly out of date by the time you read this!). Or you could also just watch this hilarious meme video.

If you want backstory, I’d highly recommend this deeply reported article in the Atlantic: Inside the Chaos at OpenAI: Sam Altman’s weekend of shock and drama began a year ago, with the release of ChatGPT.

Altman’s dismissal by OpenAI’s board on Friday was the culmination of a power struggle between the company’s two ideological extremes—one group born from Silicon Valley techno-optimism, energized by rapid commercialization; the other steeped in fears that AI represents an existential risk to humanity and must be controlled with extreme caution. For years, the two sides managed to coexist, with some bumps along the way.

This tenuous equilibrium broke one year ago almost to the day, according to current and former employees, thanks to the release of the very thing that brought OpenAI to global prominence: ChatGPT.

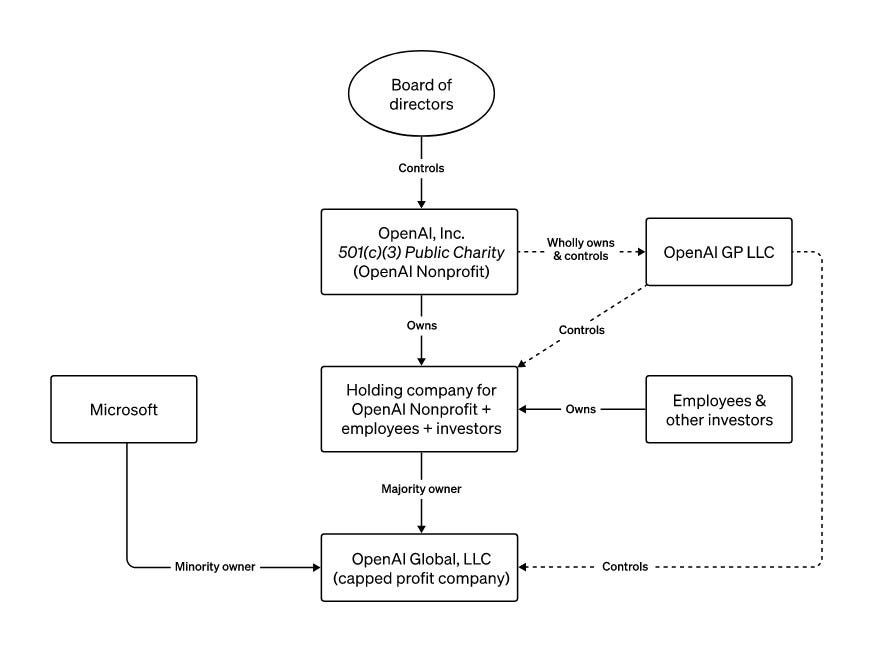

The LessWrong timeline also contains this graphic of OpenAI’s corporate structure, which is better than the one I shared last time:

(BTW, one question I haven’t seen addressed anywhere is: Do the OpenAI GP LLC and/or the holding company have their own boards of directors, separate from the 501(c)(3) charity’s board?)

The (catastrophic?) inexorability of the profit motive

No one even knows who will be the CEO of OpenAI tomorrow, let alone all the ramifications of this complete clusterf*ck.

But the one conclusion I’m sure of right now is that this is going to turn out to be a win for capitalism. As I mentioned in my last post, both OpenAI and Anthropic have unorthodox corporate structures designed to prioritize humanist values over capitalist orthodoxy. Per the Atlantic article I linked to above:

OpenAI was deliberately structured to resist the values that drive much of the tech industry—a relentless pursuit of scale, a build-first-ask-questions-later approach to launching consumer products. It was founded in 2015 as a nonprofit dedicated to the creation of artificial general intelligence, or AGI, that should benefit “humanity as a whole.” (AGI, in the company’s telling, would be advanced enough to outperform any person at “most economically valuable work”—just the kind of cataclysmically powerful tech that demands a responsible steward.) In this conception, OpenAI would operate more like a research facility or a think tank. The company’s charter bluntly states that OpenAI’s “primary fiduciary duty is to humanity,” not to investors or even employees.

This is the first and only time that a major AI company’s mission-first structure has been put to the test, and it’s already clear that the structure will fail that test one way or another. For example, take the following scenarios, which seem the most likely to me right now:

Sam Altman goes to Microsoft. Almost the entire OpenAI staff quits, most go to Microsoft and some scatter to other companies. Microsoft stops providing compute to OpenAI. OpenAI the nonprofit now has neither human capital nor the ability to run its own models, let alone create new ones. The next big advances in AI are much more likely to take place within companies without any kind of mission-driven structure, where shareholder profit is the goal.

The entire remaining board of OpenAI is forced to resign and is replaced by a board of normal tech bros, approved by Microsoft. Sam and the rest of the team return to OpenAI, without any check on their instincts towards growth and speed at the expense of the safety of humanity.

Either way, the current board of OpenAI (one of whose main mandates is to stop dangerous AI!) will be powerless to stop dangerous AI. And what’s more, no one will ever try to make a weird corporate structure like OpenAI’s again, because they won’t be able to get sufficient investment.

The inexorable truth is this: In order to build powerful AI, you need lots of capital. Right now, the only source of that much capital is private, profit-seeking investment. And once you take that kind of capital, capitalism has a way of bending you to its whims.

So while I can’t say I’m surprised, I still find it disappointing — and scary — that the idea of mission-oriented accountability for cutting-edge AI is probably dead. If the doomers turn out to be right and humanity does suffer extinction at the hands of AI, the OpenAI board’s overplaying of its hand four days ago might go down in the bots’ memory banks as the deeply ironic, pivotal moment that doom became inevitable.

Your homework: Download anything you want from ChatGPT

If you’re not a heavy ChatGPT user, there is probably no immediate action you should take related to OpenAI other than trying to stop your obsessive Twitter/X scrolling and paying attention to your family (coughcoughsorryTuck&Astracough).

But if you are: I can’t believe I’m saying this, but it is now within the realm of possibility that ChatGPT will simply stop working at some point in the next week or two, if all the OpenAI staff leave and/or Microsoft cuts off OpenAI from its cloud computing resources. So I personally just went through and found the dozen conversations I had had with ChatGPT that I would be most sad to lose, and copied them into a Google doc. You might want to consider doing the same.

Finally, my vote for Tweet (X?) of the day, which applies both to me staying up right now to write this and to at least a few dozen of my friends:

So with that, good night!