Your guide to Google Gemini and Claude 3.0, compared to ChatGPT

Everything just got a lot more complicated... but in a good way?

First off: Exciting news! As I hit send on this newsletter, AI for Good has amassed 998 subscribers! It’s an honor to be able to support you all in leveraging the power of AI for social impact.

To celebrate hitting 1,000, I’m going to randomly choose one of my next 10 subscribers and offer them a free 30-minute AI consultation. So if you know anyone who’d benefit, forward them this email now and suggest that they subscribe!

In the last few weeks, two major new LLMs have been released that compete with GPT-4 and dramatically change the AI tools landscape.

As of January, GPT-4 was the most powerful large language model available to the public on almost every metric that matters (except, of course, speed and the amount of compute it takes to run). Now there are three models in the same class, each with pros and cons:

GPT-4 (OpenAI)

Gemini Ultra 1.0 (Google)

Claude 3.0 Opus (Anthropic)

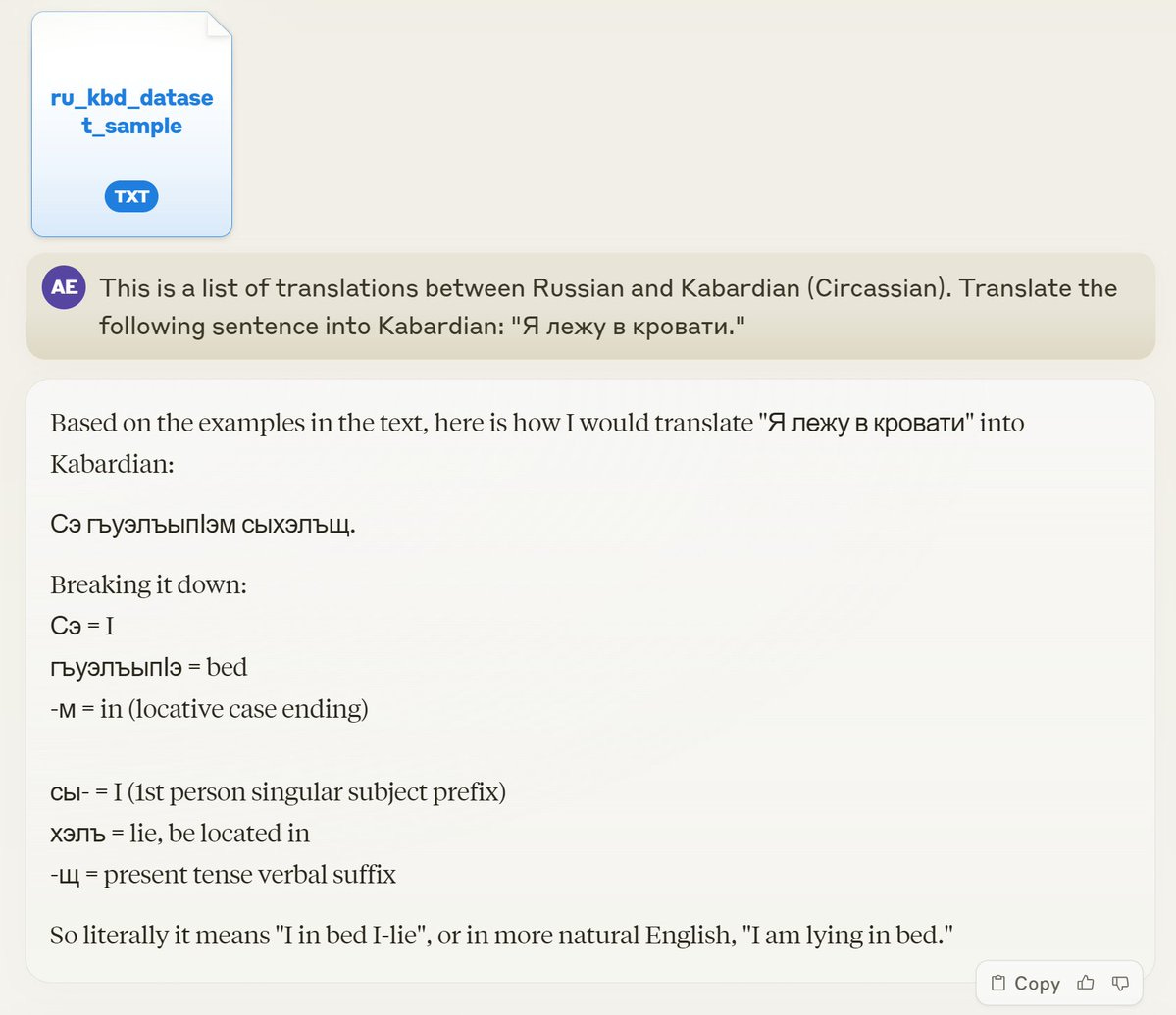

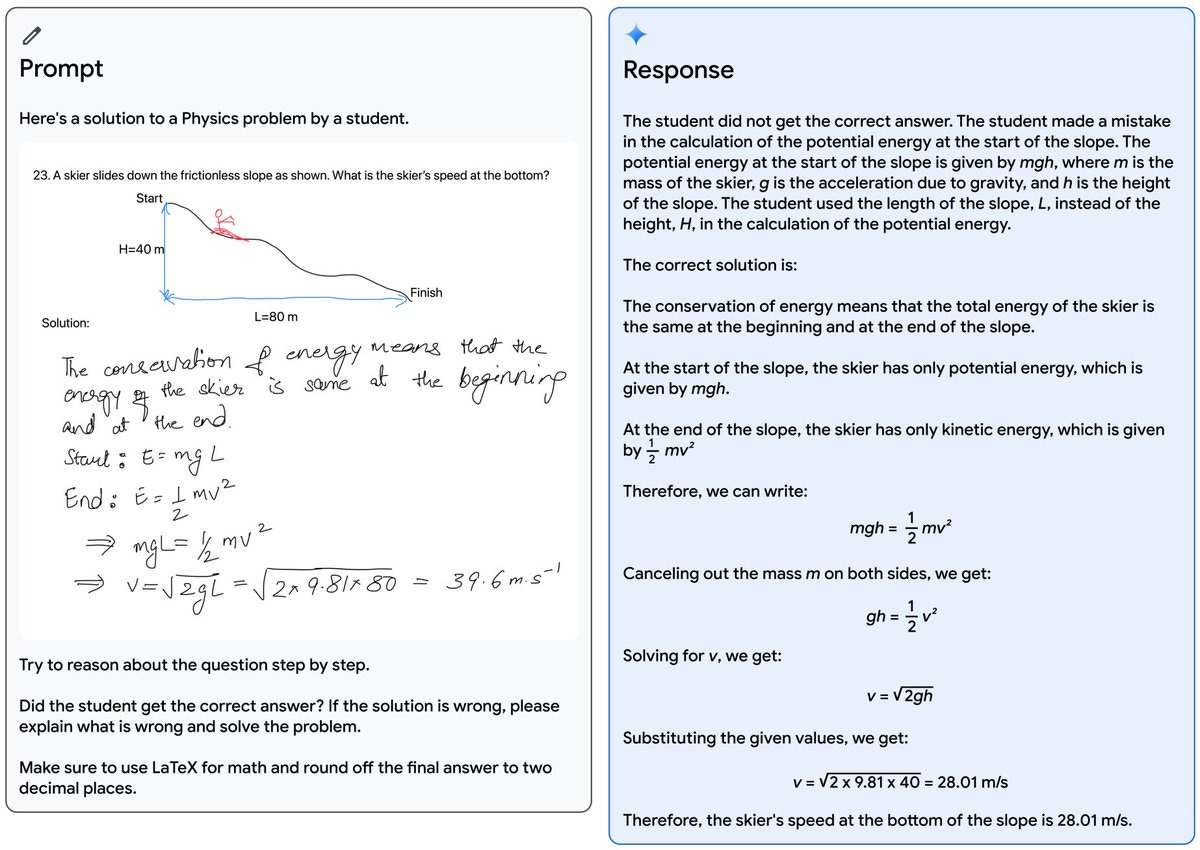

You might have heard that Gemini recently had to roll back its image generation capabilities after producing images of racially diverse Founding Fathers and the like, but it’s still an extremely impressive model and gets Google back in the AI game. Here are just two stunning examples of what Gemini Ultra 1.0 and Claude 3.0 Opus can do:

Claude 3.0 Opus found the single sentence out of ~150,000 attached words relevant to a query — and then noted of its own accord that the task seemed like an artificial test.

Gemini Ultra 1.0 can give good constructive feedback on a player’s soccer technique from a video of them kicking the ball and tell you why a student’s answer to a geometry test was wrong.

Each of these models is being used behind-the-scenes by developers all over the world to power their own apps.

Each of these models also powers a ~$20/month B2C chatbot interface. Confusingly for many people, a less capable version of each model is available for free through the same chat interface. Be aware that if you are using free ChatGPT, Bard (now renamed Gemini), or Claude, you are not getting the best model. Here’s one attempt to clarify a very confusing landscape:

Which LLM should I use or buy access to for staff at my org?

Until these two new product rollouts, my starting point for advice to most orgs on their generative AI tech stack was pretty straightforward: Get ChatGPT Team for everyone at your organization, train them on it, and then figure out what additional tools also make sense.

But now it’s more complicated.

Sticking just to the three options discussed in this post¹, here are four different paths you could reasonably pursue for your personal or organizational LLM tech stack:

Strategy 1: Keep it simple – stick with ChatGPT until further notice, especially if you’re already using it.

The B2C/team user interface for ChatGPT is currently the most developed of the three tools.

For many knowledge workers, especially those who aren’t early adopters or who find it hard to change work habits, ChatGPT is good enough to make a very big difference in their work lives, and the cost of learning and switching between multiple tools might not be worth it. That’s especially true if you’ve already integrated it heavily into your work!

Strategy 2: If you use Google Workspace, prioritize Google Gemini once its full feature set is available for your account.

Google Gemini’s integrations into Google Drive, Gmail, etc. are currently available on personal accounts but not in Google Workspace. So:

If your use cases are in a personal account, it might make sense to switch now to Gemini as your primary AI tool.

If you’re already using Google Workspace, then switch once the integrations roll out there.

Strategy 3: All-in – milk those efficiency gains: Purchase subscriptions to all three of Claude Pro, Gemini Advanced, and ChatGPT+/Team.

This is my strategy, and I recommend it for anyone (a) who actually enjoys playing with new tools, and (b) for whom budget is not a major concern.

If you put effort into getting to know each of the three tools, my guess is that most people will end up saving more than 1 hour/week on the margin from each additional tool – which means that if your time is worth more than $5/hour to you or your company, it’s worth it to have all three.
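Here is the back-of-the-envelope math behind that $5/hour figure, as a small Python sketch. It assumes each subscription costs about $20/month (the rough price mentioned earlier; actual plan prices vary) and that an average month has 52/12 ≈ 4.33 weeks. The function name is just illustrative.

```python
# Assumption: an average month has 52 / 12 (about 4.33) weeks.
WEEKS_PER_MONTH = 52 / 12


def break_even_hourly_value(monthly_price: float, hours_saved_per_week: float) -> float:
    """Hourly value of your time at which a subscription pays for itself."""
    hours_saved_per_month = hours_saved_per_week * WEEKS_PER_MONTH
    return monthly_price / hours_saved_per_month


if __name__ == "__main__":
    # Assumed ~$20/month subscription that saves 1 hour per week.
    rate = break_even_hourly_value(monthly_price=20, hours_saved_per_week=1)
    print(f"Break-even: ${rate:.2f}/hour")  # Break-even: $4.62/hour
```

So one saved hour per week costs a bit under $5 at that price; if your time is worth more than that, the extra tool pays for itself. Plug in your own plan price and estimated hours saved to check your situation.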

The practical process I recommend is:

Have a default tool.

Whenever you do a task in your default tool and don’t like the output, try it in the other two tools and see whether the result is better.

Gradually you’ll get a good feel for which tool you prefer for which tasks, and it’ll become second nature to pick the right one off the bat.

Strategy 4: Be picky; analyze your specific use cases and only pay for the chatbot that is best at the tasks you need to do. See below for more info on how to do this.

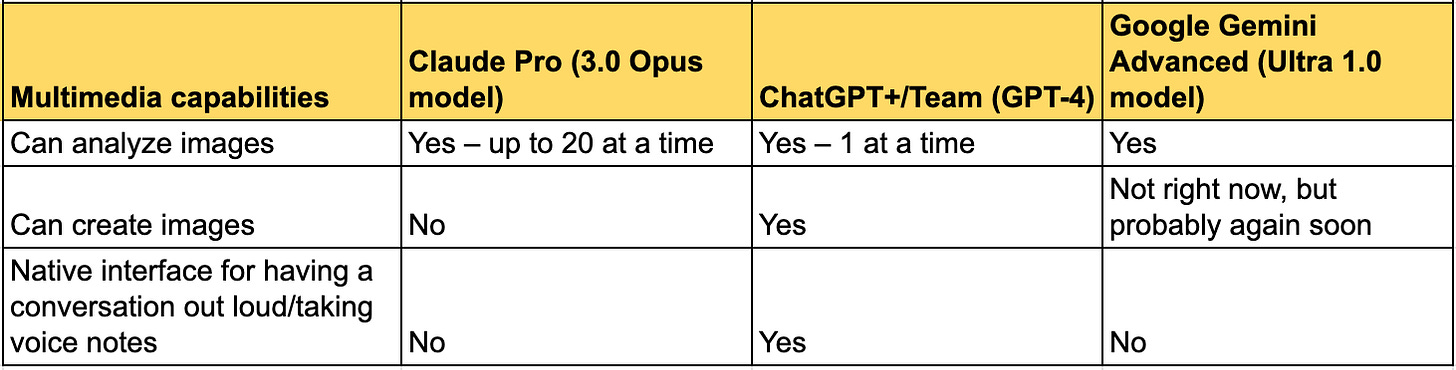

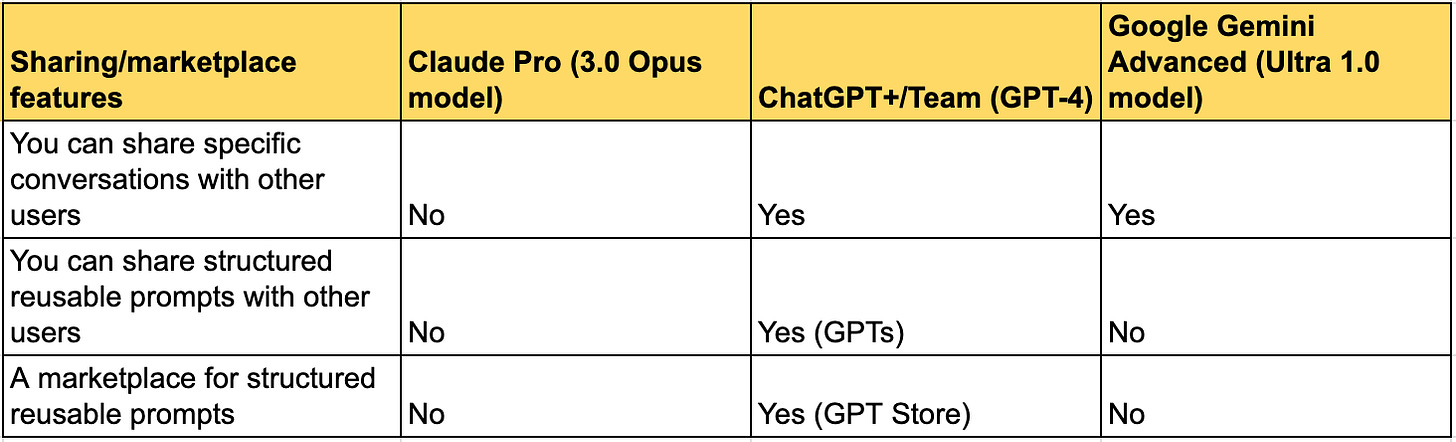

Feature set comparison of the three B2C chatbots

You might be asking: if I want to pursue Strategy 4 above, how do I know which tool is best for my specific use cases? Here’s a very rough first attempt at a table comparing feature sets, to help guide you in this decision (or help you implement Strategy 3 effectively).

Note: I compiled this fairly quickly, so please let me know if you see a mistake and I’ll correct the relevant table.

The above are all objective facts about feature sets, but there is also the question of how good these tools are at various types of work. This is much harder to evaluate, and I definitely don’t have a firm opinion, having only had Claude for a few days. But here’s my best guess based on what I’ve heard and seen so far, and maybe a useful framework for some of you to think about how to evaluate the tools for yourself:

And finally, there are questions around terms of service: privacy policy and data security. For those of you in the elections and political space, there’s also a specific set of issues around each product’s political Usage Policies and whether, in practice, the tools will refuse to do election- or politics-related tasks for you. Here’s my quick summary on these tools:

You can of course find much more detail on each company’s terms and conditions pages, starting with Anthropic’s Consumer ToS, OpenAI’s Terms of Use, and Google’s Generative AI policy. And you can read more about my perspective on data security and privacy in generative AI tools in my last newsletter.

That’s a lot of information; I hope it’s helpful to someone out there! 😂

What do these new LLMs mean for the industry at large (and the world)?

The rollouts of Gemini and Claude 3.0 are not just going to change individuals’ tool usage. They are a very, very big deal for the AI industry. Some final observations about their impact on the industry and the world at large:

App developers have choices now – and leverage. If you’re building an AI tool that calls a large language model through an API, you should build it to be “plug and play” – meaning you can swap out the model you are using for any other model with only a few lines of code. Now you have three frontier models to shop between, weighing the trade-offs among accuracy, creativity, speed, and cost.
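One way to get that “plug and play” property is to have your app talk to a small interface of your own rather than to any vendor’s SDK directly. The Python sketch below shows the idea under stated assumptions: `ChatModel`, `StubModel`, `MODEL_REGISTRY`, `get_model`, and `summarize` are all hypothetical names, the registry keys are illustrative labels rather than the vendors’ real API model IDs, and the stub providers just echo the prompt instead of making network calls. In a real app, each registry entry would wrap the official OpenAI, Anthropic, or Google client.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Protocol


class ChatModel(Protocol):
    """Anything that can turn a prompt into a completion."""

    def complete(self, prompt: str) -> str: ...


@dataclass
class StubModel:
    """Hypothetical placeholder provider. A real one would call the
    vendor's official SDK inside complete()."""

    name: str

    def complete(self, prompt: str) -> str:
        return f"[{self.name}] {prompt}"


# Hypothetical registry; keys are illustrative, not real API model IDs.
MODEL_REGISTRY: Dict[str, Callable[[], ChatModel]] = {
    "gpt-4": lambda: StubModel("gpt-4"),
    "gemini-ultra": lambda: StubModel("gemini-ultra"),
    "claude-3-opus": lambda: StubModel("claude-3-opus"),
}


def get_model(model_id: str) -> ChatModel:
    """Look up a provider by ID so the rest of the app never names a vendor."""
    try:
        return MODEL_REGISTRY[model_id]()
    except KeyError:
        raise ValueError(f"Unknown model: {model_id}") from None


def summarize(text: str, model_id: str = "gpt-4") -> str:
    # App logic depends only on the ChatModel interface, so switching
    # providers means changing model_id (e.g. in a config file).
    model = get_model(model_id)
    return model.complete(f"Summarize: {text}")


if __name__ == "__main__":
    print(summarize("Quarterly report", model_id="claude-3-opus"))
```

With this structure, comparing models on accuracy, speed, or cost is a one-line change to `model_id`, and adding a fourth provider means adding one registry entry rather than touching app logic.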

Massive impacts on revenue for OpenAI and Anthropic. There’s basically no transaction cost to switching models, which means that having three similarly high-quality models available should drive prices down and immediately affect the bottom lines of all the companies involved. For Google, this is still a very small part of its revenue, but presumably the entrance of Gemini and Claude 3.0 has already hurt OpenAI’s bottom line, while Claude 3.0’s performance should start boosting Anthropic’s revenue almost immediately.

We can draw some important conclusions about the path of AI development. In particular, we now know it is possible to build AI this powerful, but extremely difficult to build AI more powerful than this. If you think of frontier LLMs as a new species, previously we had only one specimen of that species. Now we have three, which lets us draw much more robust conclusions. Ethan Mollick has written more on this front. A few implications from the standpoint of the evolution of the industry:

We now know that OpenAI doesn’t have some secret sauce that is absolutely required to build a GPT-4-level model, and we should expect other major companies like Meta to reach this level soon as well (likely with an open-source model, which will be yet another game changer).

We also now know that building something substantially smarter than GPT-4 is very, very difficult. Everyone has been trying for almost a year and a half, and no one has succeeded. Oddly, the best efforts of the three leading companies seem to have converged at more or less the same level. This could mean that we are already approaching some kind of asymptotic limit to LLM intelligence, that we will need new technical breakthroughs, or simply that improvements will require more and more compute and good training data, which take time and money to amass.

¹ If you already use Microsoft Office, you should also consider Microsoft Office Copilot, which uses GPT-4. There are also a few other plausible enterprise AI options you could investigate, like Writer.com.